This page contains explanations and example Max patches intended to teach the control of audio signals using MSP.

These explanations were written for use by students in the Interactive Arts Programming course at UCI, and are made available on the WWW for all interested Max/MSP users and instructors. If you use the text or examples provided here, please give due credit to the author, Christopher Dobrian.


One of the challenges in designing a complicated audio-processing patch in MSP is how to "manage" a collection of different processes. This management includes such issues as a) how to organize the program so as to allow selection of different processes, b) how to keep the level of the audio correct, without clipping, c) how to fade smoothly from one process to another, avoiding clicks and other discontinuities, d) how to turn off processes that are not currently in use, to conserve CPU processing power, and e) how to design a strategy for selecting processes.

The examples below will show one method (among various possible ones) for addressing these issues.

The first three examples show how to encapsulate a simple audio process as a "subpatch" that can be used in another patch just as if it were a Max/MSP object. An inlet is created for each input signal, and another inlet is created for any commands or control signals that we may want to provide; and an outlet is created for each resultant signal. In this way, an entire audio process can be encapsulated as a subpatch to be used in a larger "main" patch, keeping the main patch organized and uncluttered, and allowing for easy modification of individual processes.

Whenever you turn an audio process on or off, or want to adjust its amplitude level, it's wise to perform a timed fade in or out rather than making an instantaneous change. An instantaneous change will cause a sudden discontinuity in the audio signal that will be perceived as a click or a noise. By controlling the level more gradually with a fade (even in as little as 20 milliseconds or so), you can smooth out such a discontinuity. Thus, the change in amplitude should be done using a control signal rather than a single Max message. The line~ object, which generates a signal that interpolates linearly between two discrete values, is very useful in this regard.
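To make the idea of a timed fade concrete outside of Max, here is a minimal Python sketch of what a linear ramp like the one from line~ does to a signal. It is only an illustration: the function names `line_ramp` and `apply_gain`, and the 44100 Hz sample rate, are assumptions made for this example and are not part of MSP.

```python
def line_ramp(start, target, fade_ms, sample_rate=44100):
    """Return per-sample gain values moving linearly from start to target.

    Hypothetical helper that imitates the kind of ramp line~ produces.
    """
    n = max(1, round(fade_ms * sample_rate / 1000))
    span = target - start
    return [start + span * (i + 1) / n for i in range(n)]


def apply_gain(signal, gains):
    """Multiply each sample by its gain; hold the last gain once the ramp ends."""
    last = gains[-1]
    return [s * (gains[i] if i < len(gains) else last)
            for i, s in enumerate(signal)]


# Fade a constant full-scale signal down to silence over 20 milliseconds.
ramp = line_ramp(1.0, 0.0, 20)
faded = apply_gain([1.0] * 1000, ramp)
```

Because the gain changes by only a tiny amount from one sample to the next, the output never jumps abruptly, which is what prevents the click.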

This subpatch, which we call gain1~ (to distinguish it from the built-in MSP object gain~), allows us to supply a desired gain (or reduction) of level and a desired fade time as Max messages, and it will execute that fade as a control signal, using line~.

Notice that the subpatch includes arguments #1 and #2 instead of stating an initial gain and fade time. This technique allows one to specify these values as typed-in arguments when using this object in a main patch. The #1 and #2 will be replaced by the first and second typed-in arguments in the main patch.

Mixing audio signals is simply the process of sample-by-sample addition, which you can perform with the +~ object. However, to avoid clipping, you usually need to scale the signals down somewhat when adding. For example, when adding two signals that are each potentially of full amplitude, it would make sense to multiply by 0.5 after adding them.

This mix~ subpatch adds two signals coming in the first two inlets, and allows you to specify the balance between the two. Specifically, it allows you to specify the level of the signal in the second inlet, and it then sets the level of the other signal to 1 minus that, in order that the sum of the gain levels will be 1, thus avoiding clipping.

So, a balance value of 0.5 will give an equal mix of the two input signals. By sending in a continuously changing signal (such as from a line~ object) as the balance value, you can do a crossfade from one signal to the other (0 to 1, or 1 to 0). The mix~ subpatch is thus useful as a simple mixer of two signals, or as a crossfader between two signals.

Note that the subpatch has been written in such a way that the balance value can be supplied as a typed-in argument in the main patch, as a float value in the right inlet, or as a control signal in the right inlet.
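The arithmetic performed by a mixer of this kind can be sketched in a few lines of Python. This is an illustration of the calculation only, not a translation of the actual subpatch; the function name `mix` and the use of plain lists as signals are assumptions made for the example.

```python
def mix(a, b, balance):
    """Mix two equal-length signals.

    balance is the gain applied to b; a receives 1 - balance, so the two
    gains always sum to 1. balance may be a single number or a per-sample
    sequence (standing in for a control signal).
    """
    if isinstance(balance, (int, float)):
        balance = [balance] * len(a)
    return [x * (1.0 - g) + y * g for x, y, g in zip(a, b, balance)]


# Equal mix of two signals (each is scaled by 0.5 before adding).
equal = mix([1.0, 1.0], [-1.0, -1.0], 0.5)

# Crossfade from a to b by ramping the balance from 0 to 1.
crossfade = mix([1.0, 1.0, 1.0], [0.0, 0.0, 0.0], [0.0, 0.5, 1.0])
```

With a constant balance the function behaves as a simple two-input mixer; with a changing balance it behaves as a crossfader.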

This pan~ subpatch takes one signal in the left inlet, and sends it out each of two outlets. The amplitude gain for each outlet is determined by a "panning" value supplied in the right inlet. (Once again, this value can be supplied as a typed-in argument in the main patch, as a float value, or as a control signal.)

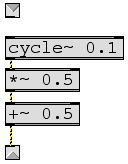

In order to change the panning without changing the overall intensity of the sound, we need to use the square root of the panning value for each channel as the gain factor. (The reason why this is so is explained in MSP Tutorial 22, as well as in most computer audio textbooks such as Moore's Elements of Computer Music, Roads's The Computer Music Tutorial, or Dodge and Jerse's Computer Music.) Because of a trigonometric identity (the squares of the sine and cosine of an angle always sum to 1), gain values with the same constant-intensity property can be found at corresponding locations on the first 1/4 cycle of a sine wave. So rather than do two square root calculations for every audio sample, it's computationally more efficient to "look up" the value by using the panning value as the phase offset on a 0 Hz cycle~ object. (Again, see MSP Tutorial 22 for more on this.)
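The two ways of computing the panning gains can be compared with a short Python sketch. The function names are hypothetical, and the sketch calls the math library directly rather than reading a stored wavetable as cycle~ does, so it shows only the arithmetic, not the efficiency gain.

```python
import math


def pan_sqrt(p):
    """Left and right gains from square roots; p runs from 0 (left) to 1 (right)."""
    return math.sqrt(1.0 - p), math.sqrt(p)


def pan_quarter_cycle(p):
    """Left and right gains read from the first quarter cycle of cosine and sine."""
    angle = p * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


# In both cases the squared gains sum to 1 at every pan position,
# so the total power stays constant as the sound moves.
for p in (0.0, 0.25, 0.5, 0.75, 1.0):
    for left, right in (pan_sqrt(p), pan_quarter_cycle(p)):
        assert abs(left * left + right * right - 1.0) < 1e-12
```

The two functions agree at the extremes and at the center (where both channels get a gain of about 0.707), and both keep the sum of the squared gains equal to 1 everywhere in between.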

Now that you've seen some essential audio processing functions encapsulated as subpatches, we'll look at them as they are used in a main patch. This main patch will also be instructive regarding one way to organize multiple audio processes.

In this example we set up a situation in which many different types of audio processing are available, but we select only one at a time. I call this a "parallel, selective" model, in which all processes are in theory running simultaneously in parallel (i.e. side by side), yet only one is selected for activation at any given time.

This patch is designed to allow the user to select one of eight audio processes. (For the sake of simplicity, we only show two of the eight processes.)

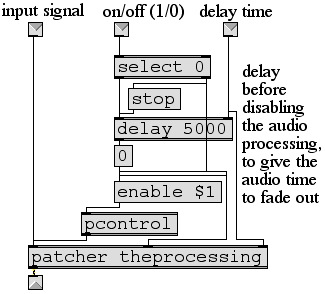

On the left side of the patch, you'll notice that when we turn audio processing on or off, we fade the input signal in or out gradually to avoid a sudden click. When turning audio off, we delay for a time, to allow the sound to fade down before MSP processing is actually stopped. Step through the messages and the structure of the patch and try to understand how it works.

Inside the patcher "process[x]" objects, we use the "enable" message to the pcontrol object to disable the previous process and enable the current process. Again we delay for a time to allow the process to fade out before we disable it.

The actual processing (whatever we decide we want to do to alter the input signal) would take place in the sub-subpatch called patcher "theprocessing". Inside those sub-subpatchers, the on/off and fade time messages can be used to turn the gain of the process up or down when that particular process is turned on or off. This will produce a smooth crossfade between the old and the new process, rather than a sudden click from one process to another.
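The order of events when switching processes (enable the new one and fade it in, fade the old one out, and disable the old one only after its fade has finished) can be sketched as a small Python model. This is a simplified illustration and not a description of how pcontrol works internally; the class name `ProcessSelector`, its attributes, and the explicit `tick` method standing in for a delay are all assumptions made for the example.

```python
class ProcessSelector:
    """Toy model of the 'parallel, selective' scheme: one process active at a time."""

    def __init__(self, count, fade_ms=20):
        self.fade_ms = fade_ms
        self.enabled = [False] * count      # whether each process is computing
        self.target_gain = [0.0] * count    # where each process's gain is fading to
        self.current = None
        self.pending = []                   # (due_time_ms, index) disables not yet fired

    def select(self, index, now_ms):
        if index == self.current:
            return
        previous = self.current
        # If the new process was waiting to be disabled, cancel that.
        self.pending = [(t, i) for t, i in self.pending if i != index]
        self.enabled[index] = True          # enable first, then fade in
        self.target_gain[index] = 1.0
        if previous is not None:
            self.target_gain[previous] = 0.0                          # start fading out
            self.pending.append((now_ms + self.fade_ms, previous))    # disable later
        self.current = index

    def tick(self, now_ms):
        """Disable every process whose fade-out time has elapsed."""
        due = [i for t, i in self.pending if t <= now_ms]
        self.pending = [(t, i) for t, i in self.pending if t > now_ms]
        for i in due:
            self.enabled[i] = False


selector = ProcessSelector(8, fade_ms=20)
selector.select(0, now_ms=0)
selector.select(1, now_ms=100)   # process 0 starts fading out, process 1 fades in
selector.tick(110)               # too early: process 0 is still enabled
selector.tick(120)               # fade finished: process 0 is now disabled
```

During the fade both processes are enabled at once, which is what makes the crossfade possible; the CPU saving begins only once the old process has faded to silence.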

The patcher "autopanner" subpatch at the bottom could be any patch that generates a control signal to determine the distribution of the mono signal to two stereo speakers. As a simple example, it could just pan continually from side to side over the course of ten seconds, like this.
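For readers who want to see the shape of such a control signal in numbers, here is a minimal Python sketch of a side-to-side panning value. It assumes that one complete trip (left to right and back again) takes ten seconds and that the motion follows a sinusoidal curve; both are assumptions made for this example, as is the function name `autopan`.

```python
import math


def autopan(t_seconds, period=10.0):
    """Panning value from 0 (left) to 1 (right) and back, once per period."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * t_seconds / period)


# Panning position sampled once per second over one full cycle.
positions = [autopan(t) for t in range(11)]
```

The value starts at 0 (fully left), reaches 1 (fully right) after five seconds, and returns to 0 after ten, so it can be used directly as the panning value for a panner of the kind described above.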